Data governance | What it is and how to use it

Data governance is a set of principles and standards that dictate how data is organized and unified within an organization. Institutions achieve high-caliber governance by establishing data management systems, policies and processes.

The goal of data governance is to ensure data accountability, security, usability and continuity throughout the data lifecycle. Organizations that achieve these objectives benefit in the long term.

Data quality plays a major role in data governance. Organizations use Smarty for address cleansing, validation and enrichment. Try out any of the address verification APIs we offer.

In this article we will cover:

- Data governance benefits

- Data governance versus data management

- Data governance body of knowledge

- Data quality

- Standardization & deduplication

- Data enrichment

Data governance benefits

Every organization already practices some form of data governance. Yes, even receipts in trash bags at the storage unit next to the office qualify as data governance. Well, they qualify as bad data governance, at the very least.

Some organizations perform effective data governance. Other organizations with dumpster fires for databases could appear on “Hoarders: Data Edition”.

Data governance is what Marie Kondo would have developed had she been raised by a database administrator and a data analyst. Effective data governance is difficult but “sparks joy” because of what it will give back to you.

By following sound data governance principles, organizations benefit by:

- Limiting risk of data leaks

- Improving data quality

- Controlling levels of data accessibility

- Harmonizing data by breaking down data silos

- Preventing data errors from entering the system

- Lowering data management costs

- Making better data-driven decisions

- “Sparking joy” every time you access data

Good data governance requires goals, systems, procedures, data stewards and more. Most of all, governance requires full corporate buy-in. We want to provide an action-oriented guide to get the ball rolling.

Data governance & data management

Data governance and data management are often conflated, but they are far from the same. Data governance dictates the rules, processes, accountability and enforcement. Data management executes the data governance processes.

So, to help you remember, data governance is Darth Sidious giving orders and data management is Darth Vader executing those orders.

For example, data governance might mandate merging all data into a single database. It could also specify which employee roles have access to particular data.

Data management would need to actually figure out how to unify the data. Data management would also need to find ways to give appropriate access to the roles.
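To make the split concrete, here is a minimal sketch in Python. It assumes governance has written a policy mapping roles to the data categories they may read; the role and category names are hypothetical. Data management's job is the code that enforces that policy.

```python
# Hypothetical policy set by data governance: which roles may read which data categories.
ACCESS_POLICY: dict[str, set[str]] = {
    "support": {"customer_contact"},
    "sales": {"customer_contact", "order_history"},
    "finance": {"order_history", "billing"},
}


def can_read(role: str, category: str) -> bool:
    """Enforcement by data management: deny by default unless the policy grants access."""
    return category in ACCESS_POLICY.get(role, set())


if __name__ == "__main__":
    print(can_read("sales", "order_history"))      # True
    print(can_read("support", "billing"))          # False
    print(can_read("intern", "customer_contact"))  # False: roles missing from the policy get nothing
```

Denying by default means a role the policy forgot to mention gets no access, which is the safer way to fail.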

Data governance body of knowledge

So, your 4.5 petabytes of data spread across 47 data warehouses and 116 garbage bags isn’t working, and you are ready to step up your data governance game? Smart! I wasn’t going to say anything, but your data was beginning to smell.

There is a lot to be done, but in the end it will all be worth it, because your data is gonna be singing like a canary. The first step is to identify the key knowledge areas of data governance.

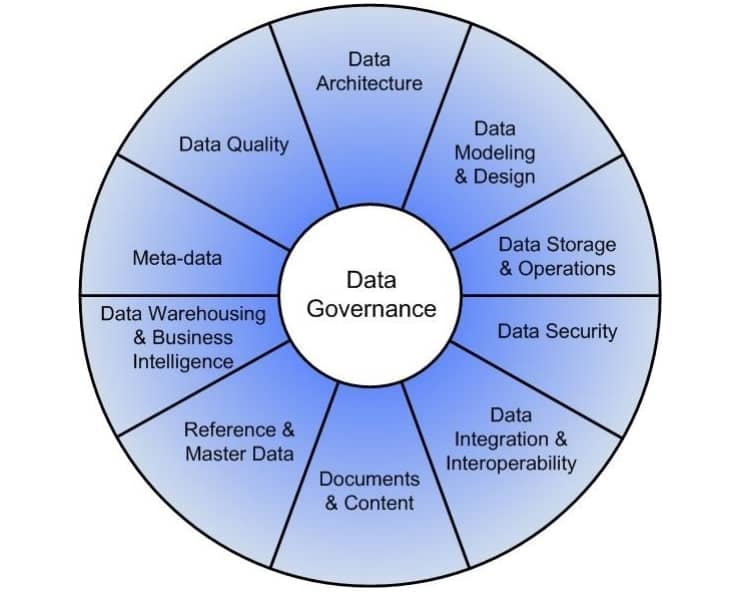

The Data Management Association (DAMA) has identified 10 knowledge areas to start you on your journey.

Imagine a wheel with data governance at the center. These 10 knowledge areas surround and support that central hub.

The center hub

- Data governance – The planning, oversight, and control over data management and data resources. Data governance is concerned with processes and systems.

Surrounding knowledge areas

These knowledge areas don’t come in any particular order, and many need to be implemented simultaneously.

- Data architecture - The structure of data and resources within the enterprise architecture.

- Data integration & interoperability - Systems must efficiently share, create and exchange data. Define the who, what, when, where and why of data sharing inside and outside the organization. Can you create a customer record in the support department and revise it in sales? Can you correct the record in IT and add to the record in finance on the same day?

- Data modeling & design - This covers the analysis, design, building, testing and maintenance of data. This may start with a flow chart of how data originates and flows through your systems. Include larger decision-making and business processes as they affect where new data originates. Data models are then used to organize and document these interconnections. This includes the who, what, why, when and how of data storage.

- Data quality - The least expensive place to improve data quality is when data first enters the system. Define what should be in place to ensure the highest possible data quality at the point of entry. Data validation services that only allow valid data to be entered reduce human error. Address validation and address autocomplete software can fulfill these needs for address data. Once data is stored, how is it monitored and measured to ensure its fidelity? Determine what processes and standards are necessary to maintain and increase data quality. More on data quality below.

- Data security - Improper data access permissions are a common cause of data leaks. Your organization should ensure appropriate access to data while maintaining suitable privacy.

- Data storage & operations - The technical management and storage of structured physical data. Database administrators (DBAs) are the key players for this knowledge area.

- Data warehousing & business intelligence - Where and how you warehouse data, and what levels of accessibility you set. How easy is it to access data for reporting, analysis and business decisions?

- Documents & content - Data is often stored outside structured databases: in documentation, articles, marketing materials and print, to name a few. As an organization evolves, much of this data will become obsolete. Systems for indexing and cataloging this unstructured data help identify outdated material. System indexes are necessary for interoperability in structured sources.

- Meta-data - Meta-data is data about data. This may cover the how, what, when, where and why of data collection. Meta-data also describes categorization, order, versions, permissions and data relations. Collect and store as much appropriate meta-data as possible. As organizations evolve, meta-data that holds little value today may become imperative. Meta-data controls should remove redundancies and inconsistencies. Make considerations for integrating, controlling and delivering meta-data.

- Reference & master data - Master data is the core data in an organization. There are usually three types: places, parties and things.

- Places: geographies, locations, sites, zones

- Parties: customers, suppliers, employees

- Things: products, hardware, vehicles

Some organizations may also have master data about contracts, warranties or other assets. Reference data is a subset of master data that clarifies and categorizes data between systems. Examples of reference data include categories, status codes and product codes; most things found in drop-down menus are reference data. What types of master and reference data are crucial for you to track and organize?
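Because reference data is a fixed set of allowed values, it can be enforced in code instead of being retyped by hand in every system. A minimal sketch, assuming hypothetical order status codes; the codes and names are illustrative, not a standard.

```python
from enum import Enum


class OrderStatus(Enum):
    """Hypothetical reference data: the only order status codes any system may store."""
    PENDING = "PEND"
    SHIPPED = "SHIP"
    CANCELLED = "CANC"


def parse_status(code: str) -> OrderStatus:
    """Map a raw code to the shared reference value, rejecting anything not on the list."""
    try:
        return OrderStatus(code.strip().upper())
    except ValueError:
        allowed = ", ".join(status.value for status in OrderStatus)
        raise ValueError(f"unknown status code {code!r}; expected one of {allowed}") from None


if __name__ == "__main__":
    print(parse_status(" ship "))  # OrderStatus.SHIPPED
```

Every system that shares the same enum (or the same lookup table) agrees on the allowed values, which is the cross-system role reference data plays, much like a drop-down menu that only offers valid choices.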

Data governance is not a “one-and-done” initiative. Governance is an ongoing process that requires commitment, buy-in and trust from stakeholders. Each of the knowledge areas outlined above will help you to know where to begin.

Data quality

Data quality is the validity, timeliness, accuracy, completeness, uniqueness, and consistency of data. Data quality is among the most important knowledge areas in data governance.
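Some of these dimensions can be measured directly. Here is a minimal sketch for two of them, completeness and uniqueness, over records stored as Python dictionaries. Treating each as a simple ratio, the `email` field, and the case-insensitive comparison are assumptions for illustration.

```python
def _clean(value) -> str:
    """Normalize a field value; None and whitespace-only values count as blank."""
    return "" if value is None else str(value).strip()


def completeness(records: list[dict], field: str) -> float:
    """Share of records where `field` is present and not blank."""
    if not records:
        return 0.0
    filled = sum(1 for record in records if _clean(record.get(field)))
    return filled / len(records)


def uniqueness(records: list[dict], field: str) -> float:
    """Share of non-blank values of `field` that are distinct (case-insensitive)."""
    values = [_clean(record.get(field)).lower() for record in records]
    values = [value for value in values if value]
    if not values:
        return 0.0
    return len(set(values)) / len(values)


# Hypothetical customer records: one blank email, one duplicate differing only in case.
customers = [
    {"email": "a@example.com"},
    {"email": "A@example.com "},
    {"email": ""},
    {"email": "b@example.com"},
]

if __name__ == "__main__":
    print(completeness(customers, "email"))          # 0.75
    print(round(uniqueness(customers, "email"), 2))  # 0.67
```

Tracking numbers like these over time gives the “monitored and measured” part of data quality something concrete to report on.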

Decisions within an organization determine business outcomes. These decisions come from the information that originates from data. So, poor data quality leads to wrong or risky business outcomes.

Sometimes poor data quality leads to the discovery of the Americas. More often, it leads to rocket explosions, pandemics and jail time for executives.

In this light, data quality will impact the long-term success of an organization, so it should be a top priority.

Data governance is a business function, and not a function of technology. This means that governance must start with top level management buy-in. From there you can form a committee with the following goals:

- Breaking down data silos - A data silo is data that is accessible only to one department or group of individuals. Data silos form due to competition, inconsistent data access, company growth or mergers. They lead to incomplete understanding, poor customer service and collaboration breakdowns. In short, data silos are a primary enemy of data quality.

- Improving data quality at entry - The number one reason for poor data quality is human error at entry. Common enemies are empty fields, typos, misspellings, input errors and duplicates. Data quality at the point of entry can improve with training, but humans are still prone to error. Automated systems can make data entry more “dummy-proof”. Companies that use validation and standardization software can correct common mistakes.

- Improving existing data quality - There is already data in your system. Implementation of data quality automation at entry will fix new data going in. But it won't fix the bad data already in your system. Oftentimes, you can use the same data entry standardization tools in existing databases.

- Obtaining more meta-data - Gathering more information about existing customer data aids business intelligence. For example, a customer’s address leads to a wealth of information: county, school zones and ZIP Code affluence data can be game changing for your company. There are hundreds of data points available depending on your needs.
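Validation at the point of entry can start with simple rules that reject obviously bad input before it is saved. A minimal sketch, assuming a hypothetical customer form with required `name`, `street` and `zip_code` fields and US ZIP Codes; a format check like this cannot tell whether an address actually exists, which is what address verification against authoritative data is for.

```python
import re

REQUIRED_FIELDS = ("name", "street", "zip_code")  # hypothetical form fields
ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")      # US ZIP or ZIP+4 format only


def validate_entry(form: dict) -> list[str]:
    """Return a list of problems; an empty list means the entry may be saved."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not str(form.get(field) or "").strip():
            problems.append(f"{field} is required")
    zip_code = str(form.get("zip_code") or "").strip()
    if zip_code and not ZIP_PATTERN.fullmatch(zip_code):
        problems.append(f"zip_code {zip_code!r} is not a valid ZIP format")
    return problems


if __name__ == "__main__":
    print(validate_entry({"name": "Ann", "street": "1 Main St", "zip_code": "84604"}))  # []
    print(validate_entry({"name": " ", "street": "1 Main St", "zip_code": "8460"}))
```

Returning every problem at once, rather than stopping at the first, lets the form show the user all the fixes needed in one pass.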

Data is not static and quality degrades with time. So it is important to revisit your quality goals and practices for long term viability. Create systems and processes that will update data to prevent data degradation.
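One way to act on this is to record when each record was last verified and periodically queue stale records for re-verification. A minimal sketch; the `last_verified` field and the 365-day interval are hypothetical choices, not recommendations.

```python
from datetime import date, timedelta

MAX_AGE = timedelta(days=365)  # hypothetical re-verification interval


def stale_records(records: list[dict], today: date) -> list[dict]:
    """Records that were never verified, or were verified longer ago than MAX_AGE."""
    return [
        record for record in records
        if record.get("last_verified") is None or today - record["last_verified"] > MAX_AGE
    ]


# Hypothetical customer records with their last verification dates.
customers = [
    {"id": 1, "last_verified": date(2024, 1, 10)},
    {"id": 2, "last_verified": date(2022, 6, 1)},
    {"id": 3, "last_verified": None},
]

if __name__ == "__main__":
    print([record["id"] for record in stale_records(customers, date(2024, 6, 1))])  # [2, 3]
```

The interval itself is a governance decision; the code only makes sure that decision is applied consistently.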

Address standardization, deduplication & validation

Often, the same or similar data enters a system or database. Common cases of this in a customer database might be:

- A customer purchases from your company through several marketplaces.

- A customer cancels an account and later creates a new account under a different email address.

- Your company acquires another company, and customer overlap needs to be resolved before the databases are merged.

Multiple records for the same customer may need to be merged or flagged for deletion. The question is, how can you identify the records to merge? The first step is to pick the attribute in the database that you want to deduplicate on.

An ideal choice in many circumstances is the customer’s physical address. Next, standardize all the addresses into the same format.

If a customer entered the following street address on the first visit to your site:

“4 Privet Dr, Oakworth, keighley”

And then purchased some months later using the following address:

“Number 4 Privet St, oakworth, Keeghly BD22 7JF”

A basic matching algorithm may view these as unique addresses, and the database would then keep both records instead of merging them.

Address standardization is formatting an address to match the address format of the local postal authority. Address verification is the process of checking for an address in the national address authority database.

If you were to standardize and verify both addresses mentioned before, both versions would become identical. Both would look like this:

“4 Privet Drive, Oakworth, Keighley BD22 7JF”

Matching records is now easy-peasy. You can delete, merge or flag the duplicates as desired, and your data warehouse will thank you. At the same time, you are standardizing to a single format and ensuring that the addresses are real. Smarty offers address validation software that performs exactly this function.
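Once addresses are standardized, finding duplicates comes down to grouping records by a shared key. A minimal sketch, assuming each record already carries a `standardized_address` returned by an address verification service (the field name and the third record are hypothetical). The light normalization only absorbs leftover differences in case and spacing; it cannot fix a misspelling like “Keeghly” by itself, which is why standardization and verification come first.

```python
import re
from collections import defaultdict


def match_key(address: str) -> str:
    """Case-fold and collapse whitespace so trivial formatting differences don't block a match."""
    return re.sub(r"\s+", " ", address).strip().casefold()


def find_duplicates(records: list[dict]) -> list[list[int]]:
    """Group record ids that share a standardized address; return only groups of two or more."""
    groups: dict[str, list[int]] = defaultdict(list)
    for record in records:
        groups[match_key(record["standardized_address"])].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# The two Privet Drive entries from the example, plus a hypothetical unrelated record.
records = [
    {"id": 101, "raw": "4 Privet Dr, Oakworth, keighley",
     "standardized_address": "4 Privet Drive, Oakworth, Keighley BD22 7JF"},
    {"id": 102, "raw": "Number 4 Privet St, oakworth, Keeghly BD22 7JF",
     "standardized_address": "4 Privet Drive, Oakworth, Keighley BD22 7JF"},
    {"id": 103, "raw": "1 Example Rd, Exampletown",
     "standardized_address": "1 Example Road, Exampletown"},
]

if __name__ == "__main__":
    print(records[0]["raw"] == records[1]["raw"])  # False: raw text looks like two customers
    print(find_duplicates(records))                # [[101, 102]]
```

Each returned group is a candidate for merging, deleting or flagging, according to whatever rule your governance policy sets.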

Data enrichment

Data enrichment is the process of taking known data and merging it with third-party data sources. The third-party data is then appended to the known data to improve business intelligence.
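In code, enrichment is essentially a join: look up third-party attributes by a shared key and append them to your records. A minimal sketch, assuming the third-party data is keyed by standardized address; the field names and values are hypothetical. It also assumes your own data should win whenever both sources have a value for the same field.

```python
def enrich(records: list[dict], third_party: dict[str, dict]) -> list[dict]:
    """Return copies of records with third-party fields appended; existing fields win."""
    enriched = []
    for record in records:
        extra = third_party.get(record["standardized_address"], {})
        enriched.append({**extra, **record})  # the record's own values override on conflict
    return enriched


# Hypothetical known data and third-party data.
customers = [
    {"id": 1, "standardized_address": "1 Example Rd, Springfield", "county": "Known County"},
    {"id": 2, "standardized_address": "2 Sample Ave, Springfield"},
]
third_party = {
    "1 Example Rd, Springfield": {"county": "Other County", "county_fips": "00001"},
    "2 Sample Ave, Springfield": {"county": "Sample County", "county_fips": "00002"},
}

if __name__ == "__main__":
    for row in enrich(customers, third_party):
        print(row)
```

Returning new dictionaries instead of mutating the originals keeps the known data intact, so you can always tell which values came from your own systems.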

Address data enrichment is often included with address validation services. For example, feeding an address list through our validator means your addresses will be:

- Returned in a standardized format.

- Verified as real and mailable.

- Enriched with 55 data points associated with the address.

Smarty provides geocodes, congressional districts and county FIPS codes, to name a few. Together with the rest of the 55 data points, they help you gain real business intelligence. And that is data you can take to the bank.

Our bulk address validation tool can return those 55 data points for ten addresses in less than twenty seconds. By the way, the tool can validate, standardize and append data for 50,000+ addresses a minute.

A powerful use case for data enrichment is geocoding. Geocoding is the process of turning an address into its corresponding latitude and longitude coordinates. Smarty offers rooftop-accurate geocoding in the US and much of the world. You can then use those coordinates to unlock near-limitless information, such as distance to flood zones, schools, delivery routes, franchise zones and a figurative ton of additional data.
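Once an address has coordinates, many of those questions become distance calculations. A minimal sketch using the haversine great-circle formula. It treats the Earth as a sphere with a mean radius of 6,371 km, so results are approximate, and the coordinates in the example are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two (latitude, longitude) points in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    d_phi = radians(lat2 - lat1)
    d_lambda = radians(lon2 - lon1)
    a = sin(d_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


if __name__ == "__main__":
    customer = (40.2338, -111.6585)  # hypothetical geocoded customer address
    school = (40.2445, -111.6493)    # hypothetical school location
    print(f"{haversine_km(*customer, *school):.2f} km")
```

Deciding what counts as “near” a school or flood zone is a business rule layered on top of this; the function only supplies the distance.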

Once you plot all your customers on a map, you can start to identify patterns of user behavior. If you know that a group of customers used a coupon at a franchise location, you can use geocoding to plot those customers on a map. You may find that they all come from particular neighborhoods. You can then target similar areas in future campaigns to help improve ROI.
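A simple way to spot those neighborhoods is to bin geocoded customers into grid cells and count them. A minimal sketch; the 0.01-degree cell size (about 1.1 km of latitude) and the coordinates are hypothetical. A production analysis might instead use proper spatial clustering or existing boundaries such as ZIP Codes.

```python
from collections import Counter
from math import floor

CELL_DEGREES = 0.01  # hypothetical grid cell size, about 1.1 km of latitude


def cell(lat: float, lon: float) -> tuple[int, int]:
    """Index of the grid cell containing a point."""
    return (floor(lat / CELL_DEGREES), floor(lon / CELL_DEGREES))


def hot_cells(points: list[tuple[float, float]], top: int = 3) -> list[tuple[tuple[int, int], int]]:
    """Most common grid cells among the given coordinates, with their counts."""
    return Counter(cell(lat, lon) for lat, lon in points).most_common(top)


# Hypothetical geocodes for customers who redeemed a coupon.
coupon_users = [
    (40.2331, -111.6581),
    (40.2339, -111.6589),
    (40.2510, -111.6010),
]

if __name__ == "__main__":
    print(hot_cells(coupon_users, top=1))  # [((4023, -11166), 2)]
```

Longitude degrees shrink toward the poles, so these cells are not perfect squares; for comparing neighborhoods within one city that distortion is usually tolerable.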

Conclusion

Data governance allows you to take greater control of your data. Governance provides you with systems, rules and processes to increase your data intelligence. Data quality is an important component of data governance.

Once you are ready to start improving your data quality by deduplicating, standardizing, validating and enriching your address data, you can start using our single address verification tool or our lightning fast address validation APIs.